Please Note: This version of the software is outdated. We highly recommend using the new automated workflow. It is much more user friendly, efficient and versatile using full automation of the processing.

Latest release: May 2013

Introduction

Light sheet microscopy such as SPIM produces enormous amounts of data especially when used in long-term time-lapse mode. In order to view and in some cases analyze the data it is necessary to process them which involves registration of the views within time-points, correction of sample drift across the time-lapse registration, fusion of data into single 3d image per time-point which may require multiview deconvolution and 3d rendering of the fused volumes. Here we describe how to perform such processing in parallel on a cluster computer.

We will use data derived from the Lightsheet Z.1 a commercial realisation of SPIM offered by Zeiss. The Lightsheet Z.1 data can be truly massive and cluster computing may well be the only way to deal with the data deluge coming of the microscope.

Every cluster is different both in terms of the used hardware and the software running on it, particularly the scheduling system. Here we use a cluster computer at the MPI-CBG that consists of 44 nodes each with 12 Intel Xeon E5-2640 cores running @ 2.50 GHz and enjoying 128GB of memory. The cluster nodes have access to 200TB of data storage provided by a dedicated Lustre Server architecture. For more info on Lustre see here, suffice to say that it is optimised for high performance input/output (read/write) operations which is crucial for the SPIM data volumes.

Each node of this cluster runs CentOS 6.3 Linux distribution. The queuing system running on the MPI-CBG cluster is LSF - Load Sharing Facility. The basic principles of job submission are the same across queuing systems, but the exact syntax will of course differ.

Note on versions

The SPIM registration is a piece of software that undergoes ongoing development. The original version gathered under plugins SPIM registration has been replaced in 2014 by new set of plugins gathered under Multiview reconstruction. Moreover, the cluster pipeline has been changed to use a centralised Linux style master file. In 2015 this pipeline was reimplemented as automated workflow using the workflow manager Snakemake. Which allows to map and dispatch the workflow logic automatically either on a single maschine or on a HPC cluster. Therefore there are 4 versions available. We highly recommend using the latest version:

-

Original SPIM registration pipeline - contains the most detailed description of the cluster pipeline using SPIM registration plugins. If you do not have much HPC/Linux experience start here.

-

NEW PIPELINE - also uses SPIM registration plugins and introduces the master file, less verbose requiring some experience with command line and HPC.

-

New Multiview Reconstruction pipeline - builds on Multiview Reconstruction plugins, uses master file, written for experts

-

Automated workflow for parallel Multiview Reconstruction - automated workflow using the workflow manager Snakemake.

So, if you are new read a bit of the chapter 1 (original pipeline) to get familiar and then skip to chapter 3 (Multiview reconstruction pipeline) which is more up-to-date. To understand how the master file works refer to chapter 2 (NEW PIPELINE).

Original SPIM registration pipeline

Pre-requisites

Saving data on Lighsheet Z.1

The Lightsheet Z.1 data are saved into the proprietary Zeiss file format *.czi. Zeiss is working with Bio-Formats to make the .czi files compatible with Open Source platforms including Fiji. At the moment Fiji can only open .czi files that are saved as a single file per view where the left and right illumination images have been fused into one image inside the Zeiss ZEN software. This situation is going to change, for now, if you want to process the data with Fiji, save them in that way (TBD).

Getting familiar with Linux command line environment

It is very likely that the cluster computer does not run ANY Graphical User Interface and relies exclusively on the command line. Steering a cluster from the command line is fairly easy - I use about 10 different commands to do everything I need to do. Since the Linux command line may be unfamiliar to most biologists we start a separate Linux command line tutorial page that explains the bare essentials.

Transferring data

First we have to get the data to the cluster. This is easier said then done because we are potentially talking about terabytes of data. Moving data over 10Gb Ethernet is highly recommended otherwise the data transfer will take days.

Please note that currently the Zeiss processing computer does not support data transfer while the acquisition computer is acquiring which means that you need to include the transfer time when booking the instruments. Transferring 5TB of data over shared 1Gb network connection will take a while…

Installing Fiji on the cluster

In case you use the MPI-CBG cluster ‘madmax’ you might spare yourself some minutes and just hijack Pavel’s well maintained Fiji installation. Just skip the Fiji installation section and do not change the path to the Fiji executables (/sw/people/tomancak/packages/...) used in the example scripts shown below.)

Change to a directory where you have sufficient privileges to install software.

cd /sw/people/tomancak/packagesDownload Fiji nightly build from Fiji’s download page.

wget http://jenkins.imagej.net/job/Stable-Fiji/lastSuccessfulBuild/artifact/fiji-linux64.tar.gzor

curl -O http://jenkins.imagej.net/job/Stable-Fiji/lastSuccessfulBuild/artifact/fiji-linux64.tar.gzIn all likelihood you will need the Linux (64 bit) version (unless you are of course using some sort of Windows/Mac cluster). Unzip and unpack the tarball

gunzip fiji-linux64.tar.gz

tar -xvf fiji-linux64.tarChange to the newly created Fiji-app directory and update Fiji from the command line

./ImageJ-linux64 --update updateThe output that follows may have some warnings and errors, but as long as it says somewhere “Done: Checksummer” and “Done: Downloading…” everything should be fine.

Done, you are ready to use Fiji on the cluster.

Renaming files

We need to change the file name from a simple index to a pattern that contains the time point and the angle information.

The output files of the Zeiss SPIM look like this:

cd /projects/tomancak_lightsheet/Christopher/29072013_HisRuby_Stock1

ls

29072013_HisRuby_Stock1.czi

29072013_HisRuby_Stock1(1).czi

29072013_HisRuby_Stock1(2).czi

29072013_HisRuby_Stock1(3).czi

29072013_HisRuby_Stock1(4).czi

...In this example we have 5 angles. The files displayed show the first time point of this time series. The first file (does not contain a index) is the master file. This file would open all subsequent files in the Zeiss program, but also contains the first angle of the first time point. We need to give this file the index (0) in order to use it. Neglecting this file, will result in a frame shift in the data.

cd /projects/tomancak_lightsheet/Christopher/29072013_HisRuby_Stock1

ls

29072013_HisRuby_Stock1(0).czi

29072013_HisRuby_Stock1(1).czi

29072013_HisRuby_Stock1(2).czi

29072013_HisRuby_Stock1(3).czi

29072013_HisRuby_Stock1(4).czi

...Now we can rename the files using the following shell script. Make a script with the name rename-zeis-files.sh Modify the angles, the last index and source pattern.

#num_angles=5

angles=( 320 32 104 176 248 )

#index

first_index=0

last_index=344

#timepoint

first_timepoint=1

#source_pattern

source_pattern=../29072013_HisRuby_Stock1\{index\}.czi

target_pattern=../spim_TL\{timepoint\}_Angle\{angle\}.czi

# --------------------------------------------------------

i=${first_index}

t=${first_timepoint}

while [ $i -le ${last_index} ]; do

# a=1

# while [ $a -le ${num_angles} ]; do

for a in "${angles[@]}"; do

source=${source_pattern/\{index\}/${i}}

tmp=${target_pattern/\{timepoint\}/${t}}

target=${tmp/\{angle\}/${a}}

echo ${source} ${target}

mv ${source} ${target}

let i=i+1

# let a=a+1

done

let t=t+1

done

chmod a+x

./rename-zeiss_files.shThe files should now be named like this:

cd /projects/tomancak_lightsheet/Christopher/29072013_HisRuby_Stock1

ls

spim_TL1_Angle32.czi

spim_TL1_Angle104.czi

spim_TL1_Angle176.czi

spim_TL1_Angle248.czi

spim_TL1_Angle320.czi

...To check if all time points and angles of a time point are present, you can use the following script. Modify the time points and the number of angles.

#!/bin/bash

#timepoints

for i in `seq 1 72`

do

num=$(ls ../spim_TL"$i"_Angle*.czi |wc -l)

#number of angles

if [ $num -ne 5 ]

then

echo "TL"$i" TP or angles missing"

fi

doneThe script will return you the specific time points that are missing or the time points that have missing angles.

Saving data as tif

As a first step we will open the .czi files and save them as .tif. This is necessary because Fiji’s bead based registration currently cannot open the .czi files. Opening hundreds of files several GB each sequentially and re-saving them as tif may take a long time on a single computer. We will use the cluster to speed-up that operation significantly.

The Lustre filesystem on MPI-CBG cluster is made to be able to handle such situation, where hundreds of nodes are going to simultaneously read and write big files to it. If your cluster is using a Network File System (NFS) this may not be such a good idea…

We have an 240 time-point, 3 view dataset (angles 325, 235 and 280) in a directory

cd /projects/tomancak_lightsheet/Tassos/

ls -1

spim_TL1_Angle235.czi

spim_TL1_Angle280.czi

spim_TL1_Angle325.czi

spim_TL1_Angle235.czi

spim_TL1_Angle280.czi

spim_TL1_Angle325.czi

...we create a subdirectory jobs/resaving and change to it

mkdir -p jobs/resaving

cd jobs/resavingNow we create a bash script create-resaving-jobs that will generate the so called job files that will be submitted to the cluster nodes (I use nano but any editor will do. Using nano type nano create-resaving-jobs` and cut&paste the script from below into that file.)

#!/bin/bash

dir="/projects/tomancak_lightsheet/Tassos"

jobs="$dir/jobs/resaving"

mkdir -p $jobs

for i in `seq 1 240`

do

job="$jobs/resave-$i.job"

echo $job

echo "#!/bin/bash" > "$job"

echo "xvfb-run -a /sw/people/tomancak/packages/Fiji.app/ImageJ-linux64 \

-Ddir=$dir -Dtimepoint=$i -Dangle=280 \

-- --no-splash ${jobs}/resaving.bsh" >> "$job"

chmod a+x "$job"

doneWe customize the script by editing the parameters inside it. One can think of it as a template that is used as a starting point to adapt to the particular situation. For instance we can change the directory dir where the data are to be found, the place where the output will go jobs, the number of time-points to process for i in $(seq 1 240) and most importantly the angle to be processed -Dangle=280. The strategy we follow here is to create jobs to process one angle at a time for all available time-points.

In order to be able to run this (and other scripts we will create further below), you might have to execute the following command: chmod a+x create-resaving-jobs. Finally execute the script by calling ./create-resaving-jobs (you will have to be in the folder containing the script).

This will generate 240 resave-<number>.job files in the current directory

/projects/tomancak_lightsheet/Tassos/jobs/resaving/resave-1.job

/projects/tomancak_lightsheet/Tassos/jobs/resaving/resave-2.job

/projects/tomancak_lightsheet/Tassos/jobs/resaving/resave-3.job

...

/projects/tomancak_lightsheet/Tassos/jobs/resaving/resave-240.job

each one of those files looks like this

#!/bin/bash

xvfb-run -a /sw/people/tomancak/packages/Fiji.app/ImageJ-linux64

-Ddir=/projects/tomancak_lightsheet/Tassos

-Dtimepoint=38 -Dangle=280 -- --no-splash

/projects/tomancak_lightsheet/Tassos/jobs/resaving/resaving.bshrunning this job a any cluster node will launch fiji in a so-called virtual frame buffer (the nodes don’t have graphics capabilities enabled but we can simulate that) and then inside Fiji it will launch a BeanShell script called resaving.bsh passing it thee parameters : the directory (/projects/tomancak_lightsheet/Tassos), the time-point (38) and the angle (280).

Lets create that script in the current directory

import ij.IJ;

import ij.ImagePlus;

import java.lang.Runtime;

import java.io.File;

import java.io.FilenameFilter;

runtime = Runtime.getRuntime();

dir = System.getProperty( "dir" );

timepoint = System.getProperty( "timepoint" );

angle = System.getProperty( "angle" );

IJ.run("Bio-Formats Importer", "open=" + dir + "spim_TL" + timepoint + "_Angle" + angle + ".czi" +

" autoscale color_mode=Default specify_range view=[Standard ImageJ] stack_order=Default

t_begin=1000 t_end=1000 t_step=1");

IJ.saveAs("Tiff ", dir + "spim_TL" + timepoint + "_Angle" + angle + ".tif");

/* shutdown */

runtime.exit(0);The t_begin=1000 t_end=1000 are parameters passed to Bio-Formats Importer. This is a hack. The .czi files think that they are part of a long time-lapse despite the fact that they were saved as single, per angle .czi. In order to trick bioformats into opening just the timepoint which contains actual data we set the time coordinate way beyond the actual length of the time-course (in this case 240). This results in Bio-Formats importing the “last” timepoint in the series which contains the data. This will change!

Now we need to create yet another bash script (last one) called submit-jobs

#!/bin/bash

for file in `ls ${1} | grep ".job$"`

do

bsub -q short -n 1 -R span[hosts=1] -o "out.%J" -e "err.%J" ${1}/$file

doneThis will look into the current directory for all files ending with .job (we created them before) and submit all of them to the cluster with the bsub command.

bsub -q short -n 1 -R span[hosts=1] -o "out.123345" -e "err.123456" ./resave-1.job-q shortselects the queue to which the job will be submitted (this one allows jobs that run up to 4 hours on MPI-CBG cluster).-n 1specifies how many processors will the job request, in this case just one (we will only open and save one file)-R span[hosts=1]says that if we were requesting more than one processor, they would be on a single physical machine (host).-o "out.%J"will create output file calledout.<job_number>in the current directory-e "err.%J"will send errors to the file callederr.<job_number>in the current directory${1}/$filewill evaluate to./resave-<number>.jobi.e. the bash script that the cluster node will run - see above

Lets recapitulate. We have created create-resaving-jobs that, when executed, creates many resave-<number>.job files. Those are going to be submitted to the cluster using submit-jobs and on the cluster nodes will run resaving.bsh using Fiji and the specified parameters.

So let’s run it. We need to issue the following command

./submit-jobs .the dot at the end tells submit job where to look for .job files i.e. in the current directory. What you should see is something like this

[tomancak@madmax resaving]$ ./submit-jobs .

Job <445490> is submitted to queue <short>.

Job <445491> is submitted to queue <short>.

Job <445492> is submitted to queue <short>.

....We can monitor running jobs with

bjobs -r

JOBID USER STAT QUEUE FROM_HOST EXEC_HOST JOB_NAME SUBMIT_TIME

445490 tomanca RUN short madmax n17 *ave-1.job May 1 11:36

445491 tomanca RUN short madmax n33 *ave-2.job May 1 11:36

445492 tomanca RUN short madmax n01 *ave-3.job May 1 11:36

445493 tomanca RUN short madmax n18 *ave-4.job May 1 11:36

445494 tomanca RUN short madmax n21 *ave-5.job May 1 11:36or whatever your submission system offers. At the end of the run we will have a lot of err.<job_number> and out.<job_number> files in the working directory.

err.445490

out.445490

err.445491

out.445491

....

The err.* are hopefully empty. The out.* contain Fiji log output if any. In this case it should look something like this. Most importantly in the directory /projects/tomancak_lightsheet/Tassos we now have for each .czi file a corresponding .tif file which was the goal of the whole exercise

ls *Angle280*

spim_TL1_Angle280.czi

spim_TL1_Angle280.tif

spim_TL2_Angle280.czi

spim_TL2_Angle280.tif

spim_TL3_Angle280.czi

spim_TL3_Angle280.tif

...We can remove the .czi files (rm *.czi) as we do not need them anymore (but check some of the tifs first!).

Now we must repeat the whole procedure for the other two angles (325 and 235). Open create-resaving-jobs and change 280 to 325 and follow the recipe again. There are of course ways to automate that.

On our cluster powered by the Lustre filesystem the resaving operation takes only minutes. Imagine what is happening - up to 480 processors are accessing the file system reading .czi files and immediately resaving it to that very same filesystem as tif - all at the same time. The files are 1.8GB each. Beware: this may not work at all on lesser filesystems - the Lustre is made for this.

Registration

SPIM registration consists of within time-point registration of the views followed by across time-point registration of the time-series. Both are achieved using Fiji’s bead based SPIM registration plugin. The per-time-point registration is a pre-requisite for time-lapse registration. For detailed overview see here.

Bead-based multi-view registration

The first real step in the SPIMage processing pipeline, after re-saving as .tif, is to register the views within each timepoint. We will use for that the bead based registration plug-in in Fiji. The principle of the plug-in are described here while the parameters are discussed here.

This description focuses on cluster processing and is less verbose, for details see section on resaving as the principles are the same.

In a directory jobs/registration create bash script create-registration-jobs

#!/bin/bash

dir="/projects/tomancak_lightsheet/Tassos"

jobs="$dir/jobs/registration_integral_img"

mkdir -p $jobs

for i in `seq 1 240`

do

job="$jobs/register-$i.job"

echo $job

echo "#!/bin/bash" > "$job"

echo "xvfb-run -a /sw/people/tomancak/packages/Fiji.app/ImageJ-linux64 \

-Ddir=$dir -Dtimepoint=$i -Dangles=325,280,235 \

-- --no-splash ${jobs}/registration.bsh$

chmod a+x "$job"

doneRun it to create 240 registration-<number>.job bash scripts

#!/bin/bash

xvfb-run -a /sw/people/tomancak/packages/Fiji.app/ImageJ-linux64

-Ddir=/projects/tomancak_lightsheet/Tassos -Dtimepoint=603

-Dangles=325,280,235,10,190 -- --no-splash

/projects/tomancak_lightsheet/Tassos/jobs/registration_integral_img/registration.bshwhich will run registration.bsh using Fiji

import ij.IJ;

import ij.ImagePlus;

import java.lang.Runtime;

import java.io.File;

import java.io.FilenameFilter;

runtime = Runtime.getRuntime();

System.out.println(runtime.availableProcessors() + " cores available for multi-threading");

dir = System.getProperty( "dir" );

timepoint = System.getProperty( "timepoint" );

angles = System.getProperty( "angles" );

IJ.run("Bead-based registration", "select_type_of_registration=Single-channel" + " " +

"select_type_of_detection=[Difference-of-Mean (Integral image based)] " + " " +

"spim_data_directory=" + dir + " " +

"pattern_of_spim=spim_TL{t}_Angle{a}.tif" + " " +

"timepoints_to_process=" + timepoint + " " +

"angles_to_process=" + angles + " " +

"bead_brightness=[Advanced ...]" + " " +

"subpixel_localization=[3-dimensional quadratic fit (all detections)]" + " " +

"specify_calibration_manually xy_resolution=1.000 z_resolution=3.934431791305542" + " " +

"transformation_model=Affine" + " " +

"channel_0_radius_1=2" + " " +

"channel_0_radius_2=3" + " " +

"channel_0_threshold=0.0069"

);

/* shutdown */

runtime.exit(0);on a cluster node when submitted by submit-jobs

#!/bin/bash

for file in `ls ${1} | grep ".job$"`

do

bsub -q short -n 1 -R span[hosts=1] -o "out.%J" -e "err.%J" ${1}/$file

doneSome tips and tricks

- the bead based registration code is NOT multi-threaded, thus 1 processor is sufficient (

bsub -n 1) - the registration needs at least as much memory on the node to be able to simultaneously open all views (3x1.8GB here). Since our nodes have 128GB of shared memory it is not really an issue here, we can run registration using 12 cores on one machine at the same time.

- the crucial parameter for bead based registation is the

channel_0_threshold=0.0069; determine it on a local workstation using Fiji GUI. Clusters typically do not have graphical interface.

Time-lapse registration

Once the per-time-point registration is finished it is necessary to register all the time-points in the time-series to a reference time-point (to remove potential sample drift during imaging). The parameters for time series registration are described here.

The time-series registration is not really a cluster type of task as it is run on a single processor in a linear fashion. But since until now we have everything on the cluster filesystem it is useful to execute it here. Note: I do not mean that timelapse registration cannot be parallelized, we just have not implemented it because it runs fairly fast in the current, linear fashion.

It is a very bad idea to execute anything other then submitting jobs on a cluster head node. LSF offers a useful alternative - a special interactive queue allowing us to connect directly to a free node of the cluster and execute commands interactively.

bsub -q interactive -Is bash

Job <445547> is submitted to queue <interactive>.

<<Waiting for dispatch ...>>

<<Starting on n27>>We are now on node 27 and can use the filesystem as if we were on the head node (not every queuing system will enable this).

We create a bash script timelapse.interactive

#!/bin/bash

xvfb-run -a /sw/people/tomancak/packages/Fiji.app/ImageJ-linux64 \

-Ddir=/projects/tomancak_lightsheet/Tassos/ -Dtimepoint=1-240 \

-Dangles=325,280,235 \

-- --no-splash ./time-lapse.bshIt calls time-lapse.bsh that will run fiji with the appropriate parameters for timelapse registration plug-in

import ij.IJ;

import java.lang.Runtime;

runtime = Runtime.getRuntime();

System.out.println(runtime.availableProcessors() + " cores available for multi-threading");

dir = System.getProperty( "dir" );

timepoint = System.getProperty( "timepoint" );

angles = System.getProperty( "angles" );

IJ.run("Bead-based registration", "select_type_of_registration=Single-channel" + " " +

"select_type_of_detection=[Difference-of-Mean (Integral image based)]" + " " +

"spim_data_directory=" + dir + " " +

"pattern_of_spim=spim_TL{t}_Angle{a}.tif" + " " +

"timepoints_to_process=" + timepoint + " " +

"angles_to_process=" + angles + " " +

"load_segmented_beads" + " " +

"subpixel_localization=[3-dimensional quadratic fit (all detections)]" + " " +

"specify_calibration_manually xy_resolution=1.000 z_resolution=3.934431791305542" + " " +

"transformation_model=Affine" + " " +

"channel_0_radius_1=2" + " " +

"channel_0_radius_2=3" + " " +

"channel_0_threshold=0.0098" + " " +

"re-use_per_timepoint_registration" + " " +

"timelapse_registration" + " " +

"select_reference=[Manually (specify)]" + " " +

"reference_timepoint=709"

);

/* shutdown */

runtime.exit(0);Executing the timelapse.interactive

./timelapse.interactivewill start a long stream of timelapse registration output. Its a good idea to redirect it to a file like this:

./timelapse.interactive > timelapse_reg.outWe can just as well run the timelapse registration from the head node by issuing

bsub -q short -n 1 -R span[hosts=1] -o "out.%J" -e "err.%J" ./timelapse.interactiveIn this case the output will go into out.<job_number> file in the working directory.

Tips and tricks

- The crucial parameter of timelapse registration is

reference_timepoint=709. It could be either a timepoint with low registration error or a timepoint in the middle of the time series. - It is important to specify the

z_resolutionintimelapse.bsh(specify_calibration_manually xy_resolution=1.000 z_resolution=3.934431791305542), otherwise the plugin will open every raw data file to read the metadata which can take quite long. - the

xy_resolutioncan be set to 1 since the plugin only uses the ratio between xy and z - For very long time-series where the sample potentially jumps in the field of view it may be necessary to register several segments of the series separately.

Fusion

In multi-view SPIM imaging fusion means combination of registered views into a single output image. Fiji currently implements two distinct fusion strategies: content based fusion and multi-view deconvolution. For detailed overview see SPIM registration page.

Content based multiview fusion

After registration we need to combine the views into a single output image. The content based fusion algorithm in Fiji solves that problem by evaluating local image entropy and weighing differentially the information in areas where several views overlap. For details see here.

As before we create a directory jobs/fusion and in there bash script create-fusion-jobs

#!/bin/bash

dir="/projects/tomancak_lightsheet/Tassos/"

jobs="$dir/jobs"

mkdir -p $jobs

for i in `seq 1 240`

do

job="$jobs/fusion/fusion-$i.job"

echo $job

echo "#!/bin/bash" > "$job"

echo "xvfb-run -a /sw/people/tomancak/packages/Fiji.app/ImageJ-linux64 \

-Xms100g -Xmx100g -Ddir=$dir -Dtimepoint=$i -Dangles=325,280,235 \

-Dreference=120 -Dx=0 -Dy=0 -Dz=0 -Dw=1936 -Dh=1860 -Dd=1868 \

-- --no-splash /projects/tomancak_lightsheet/Tassos/jobs/fusion/fusion.bsh" \

>> "$job"

chmod a+x "$job"

donethat will generate many fusion-<number>.job scripts

#!/bin/bash

xvfb-run -a /sw/people/tomancak/packages/Fiji.app/ImageJ-linux64 -Xms100g -Xmx100g

-Ddir=/projects/tomancak_lightsheet/Tassos/ -Dtimepoint=10 -Dangles=325,280,235

-Dreference=120 -Dx=0 -Dy=0 -Dz=0 -Dw=1936 -Dh=1860 -Dd=1868 -- --no-splash

/projects/tomancak_lightsheet/Tassos/jobs/fusion/fusion.bshEach of these will run fusion.bsh

import ij.IJ;

import java.lang.Runtime;

runtime = Runtime.getRuntime();

System.out.println(runtime.availableProcessors() + " cores available for multi-threading");

dir = System.getProperty( "dir" );

timepoint = System.getProperty( "timepoint" );

angles = System.getProperty( "angles" );

reference = System.getProperty( "reference" );

x = System.getProperty( "x" );

y = System.getProperty( "y" );

z = System.getProperty( "z" );

w = System.getProperty( "w" );

h = System.getProperty( "h" );

d = System.getProperty( "d" );

iter = System.getProperty( "iter" );

IJ.run("Multi-view fusion", "select_channel=Single-channel" + " " +

"spim_data_directory=" + dir + " " +

"pattern_of_spim=spim_TL{t}_Angle{a}.tif timepoints_to_process=" + timepoint + " " +

"angles=" + angles + " " +

"fusion_method=[Fuse into a single image]" + " " +

"process_views_in_paralell=All" + " " +

"blending" + " " +

"content_based_weights_(fast," + " " +

"downsample_output=4" + " " +

"registration=[Time-point registration (reference=" + reference + ") of channel 0]" + " " +

"crop_output_image_offset_x=" + x + " " +

"crop_output_image_offset_y=" + y + " " +

"crop_output_image_offset_z=" + z + " " +

"crop_output_image_size_x=" + w + " " +

"crop_output_image_size_y=" + h + " " +

"crop_output_image_size_z=" + d + " " +

"fused_image_output=[Save 2d-slices, one directory per time-point]");

/* shutdown */

runtime.exit(0);on a cluster node when submitted by submit-jobs

#!/bin/bash

for file in `ls ${1} | grep ".job$"`

do

bsub -q short -n 12 -R span[hosts=1] -o "out.%J" -e "err.%J" ${1}/$file

doneTips and tricks:

- Fusion is memory intensive no matter what.

- The content based fusion will necessarily degrade image quality. Thus it makes only sense to fuse the image for visualization purposes such as 3D rendering.

- It is not necessary or even possible to 3D render the full resolution data. Thus we use the

downsample_output=4option to make it 4 times smaller. - The downsampling also reduces the storage requirements for the fused data which can be unrealistic for full resolution data (tens of terabytes).

- The fusion code is multi-threaded, therefore we request 12 processors on one host

bsub -n 12 -R span[hosts=1]and request as much memory as possiblefiji-linux64 -Xms100g -Xmx100g. Requesting 12 hosts guarantees all the memory on a single node is available for the job (128GB). It may be difficult to get that when others are running small, single processor jobs on the cluster. - The integral image mediated weightening is much faster than the traditional gauss method, for large images it may be the only option as one can also run out of 128GB of RAM with this data.

Multiview deconvolution

Another, more advanced, way to fuse the registered data is multiview deconvolution which is described here.

The deconvolution can be executed either on the CPU (Central Processing Unit - i.e. the main processor of the computer) or on GPU (Graphical Processing Unit - i.e. the graphics card). The pre-requisite for the GPU processing is to have one or more graphics cards capable of CUDA such as NVIDIA Tesla or Quadro or GeForce. Since the GPU accelerated multi-view deconvolution is not yet published and the necessary C code has to be obtained from Stephan Preibisch by request we will focus for now on deconvolution using CPU.

The GPU mediated deconvolution is faster, but currently only by a factor of 2-3 and so the CPU version makes sense, especially when you have a big cluster of CPUs and no or few GPUs.

Multiview deconvolution on CPU

In contrast to the multiview fusion plugin described above, Stephan Preibisch, in his infinite wisdom ;-), did not implement the option to scale down the data before deconvolution starts. Since deconvolution is a very expensive operation, it will take a very long time (hours) on full resolution data. If the sole purpose of fusing the data by deconvolution is to render them in 3D, the full resolution images are not necessary, ergo we need to downsample. Fortunately Stephan implemented a workaround in the form of a script that prepends a transformation (such as scaling) to the raw SPIM data.

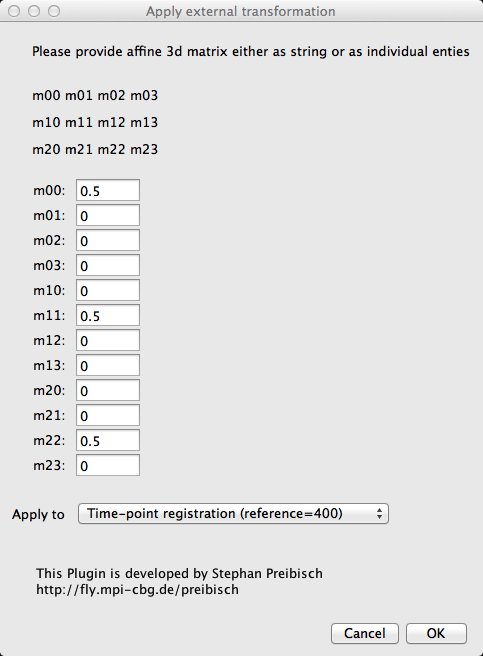

The script can be found under Plugins › SPIM registration › Utilities › Apply external transformation (or press L and type Apply external transformation). The initial dialog is reminiscent of SPIM registration, the screen that comes after that is not.

What we are looking at is the so called Affine Transformation Matrix that will be pre-concatenated to the transformation matrix in the registration files from bead based registration. The m00, m11 and m22 entries of the matrix represent the scaling of the image and so by setting all three of them to 0.5 we will downscale the image by a factor of 2.

The output of running the Apply external transformation will look like this:

Pre-concatenating model:

3d-affine: (0.5, 0.0, 0.0, 0.0, 0.0, 0.5, 0.0, 0.0, 0.0, 0.0, 0.5, 0.0)

Applying model to: spim_TL400_Angle1.tif.registration.to_400

Applying model to: spim_TL400_Angle2.tif.registration.to_400

Applying model to: spim_TL400_Angle3.tif.registration.to_400

Applying model to: spim_TL400_Angle4.tif.registration.to_400

Applying model to: spim_TL400_Angle5.tif.registration.to_400

Applying model to: spim_TL400_Angle6.tif.registration.to_400

and the registration files spim_TL400_Angle1.tif.registration.to_400 in the registration/ directory will now end with something like this:

Previous model: (0.996656, 0.0014411004, 0.003138572, -5.4490294, -0.0019162297, 0.9955152, -8.5441023E-4, -81.664116, -5.4249633E-4, -2.910602E-4, 0.9978538, 10.278229)

Pre-concatenated model: (0.5, 0.0, 0.0, 0.0, 0.0, 0.5, 0.0, 0.0, 0.0, 0.0, 0.5, 0.0)

Now this looks elegant, but there are several caveats. The pre-concatenation of transformation models is not reversible (or at least not easily in the current code framework) and so before applying external transformation we recommend to archive the old, unmodified registration files. For example by packaging them to a tar archive

tar -cvf all_to_400_regs.tar *.to_400and decompressing in order to get to the original, unaltered transformation models

tar -xvf all_to_400_regs.tarSecond issue, AND IMPORTANT ONE. The new transformation (scaling) must be applied to every timepoint in the registered time-series INCLUDING the reference time-point. For good measure, it is also necessary to apply the transformation to the original non-time-series .registration files of the reference time-point ONLY. Don’t ask me why… These two steps (pre-concatenating transformation models to reference time point just once) are really not clusterizable and so we recommend to do them manually in Fiji on a local machine and copy the modified registration files to the registration/ directory on the cluster. Yes, it is clunky, but its better than nothing.

Now we are ready for the cluster mediated deconvolution on the downscaled data. By now you should know the drill… Create a directory jobs/deconvolution and in there a bash script create-deconvolution-jobs

#!/bin/bash

dir="/projects/tomancak_lightsheet/Tassos"

jobs="$dir/jobs/deconvolution"

mkdir -p $jobs

for i in `seq 1 240`

do

job="$jobs/deconvolution-$i.job"

echo $job

echo "#!/bin/bash" > "$job"

echo "xvfb-run -a /sw/people/tomancak/packages/Fiji.app/ImageJ-linux64 \

-Xms100g -Xmx100g -Ddir=$dir -Dtimepoint=$i -Dangles=1-6 -Dreference=714 \

-Dx=36 -Dy=168 -Dz=282 -Dw=1824 -Dh=834 -Dd=810 -Diter=10 \

-- --no-splash /projects/tomancak_lightsheet/Tassos/jobs/deconvolution/deconvolution.bsh" \

>> "$job"

chmod a+x "$job"

donethat will generate many deconvolution-<number>.job scripts

#!/bin/bash

xvfb-run -a /sw/people/tomancak/packages/Fiji.app/ImageJ-linux64 -Xms100g -Xmx100g

-Ddir=/projects/tomancak_lightsheet/Valia/Valia -Dtimepoint=1 -Dangles=1-6 -Dreference=714

-Dx=36 -Dy=168 -Dz=282 -Dw=1824 -Dh=834 -Dd=810 -Diter=10 -- --no-splash

/projects/tomancak_lightsheet/Tassos/jobs/deconvolution/deconvolution.bshNote the new parameter iter which specifies how many iterations of the multiview deconvolution we want to run. This should be determined empirically on a local GUI Fiji set-up.

Each of the *deconvolution-

import ij.IJ;

import java.lang.Runtime;

fiji.plugin.Multi_View_Deconvolution.psfSize = 31;

fiji.plugin.Multi_View_Deconvolution.isotropic = true;

runtime = Runtime.getRuntime();

System.out.println(runtime.availableProcessors() + " cores available for multi-threading");

dir = System.getProperty( "dir" );

timepoint = System.getProperty( "timepoint" );

angles = System.getProperty( "angles" );

reference = System.getProperty( "reference" );

x = System.getProperty( "x" );

y = System.getProperty( "y" );

z = System.getProperty( "z" );

w = System.getProperty( "w" );

h = System.getProperty( "h" );

d = System.getProperty( "d" );

iter = System.getProperty( "iter" );

//First we pre-concatenate a transformation matrix that downscales the image by a factor of 2,

//comment the following macro command out if you want to deconvolve full resolution data.

IJ.run("Apply external transformation",

"spim_data_directory=" + dir + " " +

"pattern_of_spim=spim_TL{t}_Angle{a}.tif" + " " +

"timepoints_to_process=" + timepoint + " " +

"angles=" + angles + " " +

"how_to_provide_affine_matrix=[As individual entries]" + " " +

"m00=0.5 m01=0 m02=0 m03=0 m10=0 m11=0.5 m12=0 m13=0 m20=0 m21=0 m22=0.5 m23=0" + " " +

"apply_to=[Time-point registration (reference=" + reference + ")]");

IJ.run("Multi-view deconvolution", "spim_data_directory=" + dir + " " +

"pattern_of_spim=spim_TL{t}_Angle{a}.tif" + " " +

"timepoints_to_process=" + timepoint + " " +

"angles=" + angles + " " +

"registration=[Time-point registration (reference=" + reference + ") of channel 0]" + " " +

"crop_output_image_offset_x=" + x + " " +

"crop_output_image_offset_y=" + y + " " +

"crop_output_image_offset_z=" + z + " " +

"crop_output_image_size_x=" + w + " " +

"crop_output_image_size_y=" + h + " " +

"crop_output_image_size_z=" + d + " " +

"type_of_iteration=[Conditional Probability (fast, precise)]" + " " +

"number_of_iterations=" + iter + " " +

"use_tikhonov_regularization tikhonov_parameter=0.0060" + " " +

"imglib_container=[Array container]" + " " +

"compute=[in 512x512x512 blocks]" + " " +

// "blocksize_x=1024 blocksize_y=1024 blocksize_z=1024" + " " +

"compute_on=[CPU (Java)]" + " " +

"fused_image_output=[Save 2d-slices, one directory per time-point]"

);

/* shutdown */

runtime.exit(0);Stuff that matters here are the following parameters:

number_of_iterations=10specifies the number of iterations (10 is a good guess)compute_on=[CPU (Java)]here we indicate that we want to use CPUcompute=[in 512x512x512 blocks]most likely we will have to compute in blocks unless we have really a lot of memory available.fiji.plugin.Multi_View_Deconvolution.psfSize = 31;this parameter should be considered advanced for now, it specifies the size of the area used to extract the Point Spread Function (PSF) from the beads in the image. Default is 19.

otherwise the parameters are similar to content based fusion or constants.

on a cluster node when submitted by submit-jobs

#!/bin/bash

for file in `ls ${1} | grep ".job$"`

do

bsub -q medium -n 12 -R span[hosts=1] -o "out.%J" -e "err.%J" ${1}/$file

doneTips and tricks:

- Multiview deconvolution needs as much memory as possible.

- The memory requirements can be mitigated by using smaller blocks and the processing will take longer.

- The output deconvolved image will have extremely compressed dynamic range, i.e. will look pitch black upon opening. Set the min and max to 0.0 and 0.05 to see anything.

- The PSFs of the beads will become smaller (ideally points) but brighter.

- The image will appear much more noisy compared to content fused or raw data.

- The deconvolution.bsh script by default downscales the images before deconvolution commences. If you want to do that do not forget to first downscale manually the reference time-point (as described - both the original and the timelapse registration versions), use it to define the crop area on a local machine and transfer the

.registrationand.registration.to_<reference timepoint>files FOR THE REFERENCE TIME-POINT to the cluster. - In fact it is best to perform the entire deconvolution process of the reference time-point locally and transfer the results to the cluster. First of all its good to experiment with the number of iterations and to look at what the deconvolution does to the data. Second, since on the cluster we are applying the downscaling to ALL the time-points - this includes the reference to which we applied the transformation on our local machine (see tip above). Therefore the reference time-point ends up downscaled twice. If you don’t get it - call me ;-).

- In order to deconvolve full resolution data, no need to do the previous step however the pre-concatenation macro MUST BE commented out in the deconvolution script. Otherwise things will get really weird!

Multiview deconvolution on GPU

Coming soon.

3D rendering

Finally we want generate a beautiful 3D rendering of the downsampled, fused data and run it as movies at conferences… ;-).

The preparation phase of 3D rendering is a bit more complicated. We will use the interactive Stack Rotation plugin to position the specimen the way we want to render it and then send it to 3DViewer plugin. Here is the recipe:

-

Open fused image stack and launch Interactive Stack Rotation plugin (Plugins › Transform › Interactive Stack Rotation). Note: Familiarize yourself with the keystrokes that navigate the Interactive Stack Rotation. This is an extremely powerful way of looking at nearly isotropic 3D data coming from SPIM. More advanced version honoring these keystroke conventions is coming to Fiji soon (by Tobias Pietzsch).

-

Use the key commands to rotate the specimen into the position from which you want to 3D render it. Note that the top slice with the lower z-index will be facing towards you when rendering in 3d Viewer.

-

Record the transform by pressing E. The transformation matrix will appear in the Fiji log window.

-

Copy the transform into the

render.bshscript shown below into line 41 (read the comments if unsure). -

Press ↵ Enter to apply the transformation to the stack.

-

Now use the rectangle tool to define a crop area that will include the specimen with minimal background. Write down the x,ycoordinates width and height of the crop area and paste them into the

render.bshscript (line 128). Note: A more efficient way to capture the numbers is to start macro record before and simply copy and paste them from the macro recorder window. -

Apply crop (Image › Crop).

-

Determine the z-index where the specimen starts and ends and paste them into the render.bsh script (line 131).

-

Run Duplicate command (Image › Duplicate) and enter the z-index as range (for example 20-200). A tightly cropped specimen stack should be the result of this series of operations.

-

Adjust brightness and contrast on the stack to see the data well, perhaps slightly saturating and write the min and max into the render.bsh script (line 31).

-

Launch the 3d Viewer and experiment with threshold (3d Viewer then Edit › Adjust Threshold) and transparency (3DViewer then Edit › Change Transparency) and enter them into the render.bsh script (lines 154 and 156).

-

Finally modify the dimensions of the Snapshot that the 3D VIewer takes to match the dimensions of the crop area (width and height) on line 161.

We are ready to begin the cluster processing by creating our old friend, the create-render-job bash script in a directory jobs/3d_rendering

#!/bin/bash

dir="/projects/tomancak_lightsheet/Tassos/"

jobs="$dir/jobs"

mkdir -p $jobs

for i in `seq 1 241`

do

job="$jobs/3d_rendering/render-$i.job"

echo $job

echo "#!/bin/bash" > "$job"

echo "xvfb-run -as\"-screen 0 1280x1024x24\" \

/sw/people/tomancak/packages/Fiji.app/ImageJ-linux64 \

-Xms20g -Xmx20g -Ddir=$dir -Dtimepoint=$i -Dangle=1 \

-- --no-splash $dir/jobs/3d_rendering/render.bsh" >> "$job"

chmod a+x "$job"

donewho will create render-<number>.job

#!/bin/bash

xvfb-run -as"-screen 0 1280x1024x24" /sw/people/tomancak/packages/Fiji.app/ImageJ-linux64 -Xms20g -Xmx20g -Ddir=/projects/tomancak_lightsheet/Tassos \

-Dtimepoint=1 -Dangle=1 -- --no-splash /projects/tomancak_lightsheet/Tassos/jobs/3d_rendering/render.bshEach bash script is passing a directory, timepoint and rendering angle parameters to render.bsh BeanShell script. The script is little more complicated than before. It combines Saalfeld’s BeanShell magic with my clumsy macro programming. It is necessary to change the parameters inside the script according to the recipe above for each individual rendering run.

import java.lang.Runtime;

import ij.ImagePlus;

import ij.ImageStack;

import ij.process.ImageProcessor;

import ij.IJ;

import ij.measure.Calibration;

import mpicbg.ij.stack.InverseTransformMapping;

import mpicbg.models.TranslationModel3D;

import mpicbg.models.AffineModel3D;

import mpicbg.models.InverseCoordinateTransformList;

runtime = Runtime.getRuntime();

dir = System.getProperty("dir");

timepoint = System.getProperty( "timepoint" );

angle = System.getProperty("angle");

System.out.println("Opening");

System.out.println(dir);

System.out.println(timepoint);

/*MACRO*/

/* opening the fused image stack*/

IJ.run("Image Sequence...", "open=" + dir + "/output_fusion/" + " " +

"number=809 starting=1 increment=1 scale=100" + " " +

"file=img_tl" + timepoint + "_" + " " +

"or=[] sort");

/* !!!!!!!!!! Adjust the min and max of the input image !!!!!!!!!! */

ij.IJ.setMinAndMax(200, 600);

/* down scale if necessary with macro */

System.out.println("Scaling");

IJ.run("Scale...", "x=0.5 y=0.5 z=0.5 depth=405 interpolation=Bilinear average process create title=scaled");

System.out.println("Rotating");

/* !!!!!!!!!! paste the 3d transform matrix from Interactive Stack Rotation here. Remove any text, only numbers that must begin and end with curly brackets !!!!!!!!!! */

am = new float[]{

0.97536975, -0.14100166, 0.16962992, 0.0, -0.18503368, -0.9416376, 0.28122446, 0.0, 0.12007594, -0.30568472, -0.9445343, 0.0};

imp = IJ.getImage();

System.out.println(imp);

/* un-scale */

c = imp.getCalibration();

zFactor = (float)(c.pixelDepth / c.pixelWidth);

unScale = new AffineModel3D();

unScale.set(

1.0f, 0.0f, 0.0f, 0.0f,

0.0f, 1.0f, 0.0f, 0.0f,

0.0f, 0.0f, zFactor, 0.0f);

/* center shift */

centerShift = new TranslationModel3D();

centerShift.set(-imp.getWidth() / 2, -imp.getHeight() / 2, -imp.getStack().getSize() / 2 * zFactor);

/* center un-shift */

centerUnShift = new TranslationModel3D();

centerUnShift.set(imp.getWidth() / 2, imp.getHeight() / 2, imp.getStack().getSize() / 2 * zFactor);

/* rotation */

rotation = new AffineModel3D();

rotation.set(

am[0], am[1], am[2], am[3],

am[4], am[5], am[6], am[7],

am[8], am[9], am[10], am[11]);

transform = new AffineModel3D();

transform.preConcatenate(unScale);

transform.preConcatenate(centerShift);

transform.preConcatenate(rotation);

transform.preConcatenate(centerUnShift);

/* bounding volume */

min = new float[]{0, 0, 0};

max = new float[]{imp.getWidth(), imp.getHeight(), imp.getStack().getSize()};

transform.estimateBounds(min, max);

w = (int)Math.ceil(max[0] - min[0]);

h = (int)Math.ceil(max[1] - min[1]);

d = (int)Math.ceil(max[2] - min[2]);

/* bounding volume offset */

minShift = new TranslationModel3D();

minShift.set(-min[0], -min[1], -min[2]);

transform.preConcatenate(minShift);

/* render target stack */

mapping = new InverseTransformMapping(transform);

ip = imp.getStack().getProcessor(1).createProcessor(imp.getWidth(), imp.getHeight());

System.out.println(ip);

targetStack = new ImageStack(w, h);

for (s = 0; s < d; ++s) {

ip = ip.createProcessor(w, h);

mapping.setSlice(s);

try {

mapping.mapInterpolated(imp.getStack(), ip);

}

catch (Exception e) {

e.printStackTrace();

}

targetStack.addSlice("", ip);

}

/* set proper calibration (it's isotropic at the former x,y-scale now) */

impTarget = new ImagePlus("target", targetStack);

System.out.println(impTarget);

calibration = imp.getCalibration().copy();

calibration.pixelDepth = calibration.pixelWidth;

impTarget.setCalibration(calibration);

impTarget.show();

/* MACRO */

System.out.println("Cropping");

IJ.run("Select Bounding Box");

/* !!!!!!!!!! Enter the x,y, width and height of the crop area. When working with up/downsampled images multiply or divide the numbers accordingly !!!!!!!!!! */

ij.IJ.makeRectangle(24, 170, 960, 362);

IJ.run("Crop");

/* !!!!!!!!!! Enter the z-index where the specimen starts and ends. When working with up/downsampled images multiply or divide the numbers accordingly !!!!!!!!!! */

IJ.run("Duplicate...", "duplicate range=146-480");

impdupl = IJ.getImage(); // catch the new window - who knows why....

impdupl.show();

IJ.run("8-bit");

IJ.run("Add...", "value=1 stack"); // because 3d viewer will re-scale the bounding box

System.out.println("Rendering");

IJ.run("3D Viewer");

ij.IJ.wait(1000);

ij3d.ImageJ3DViewer.setCoordinateSystem("false");

ij3d.ImageJ3DViewer.add("target-1", "None", "target-1", "0", "true", "true", "true", "1", "0");

ij.IJ.wait(1000);

ij3d.ImageJ3DViewer.select("target-1");

ij.IJ.wait(1000);

ij3d.ImageJ3DViewer.setTransform("1.0 0.0 0.0 0.0 0.0 1.0 0.0 0.0 0.0 0.0 1.0 -1050.0 0.0 0.0 0.0 1.0");

ij.IJ.wait(5000);

/* !!!!!!!!!! Enter the 3D Viewer threshold !!!!!!!!!! */

ij3d.ImageJ3DViewer.setThreshold("50");

/* !!!!!!!!!! Enter the 3D Viewer transparency !!!!!!!!!! */

ij3d.ImageJ3DViewer.setTransparency("0.15");

ij.IJ.wait(5000);

ij3d.ImageJ3DViewer.select("Dummy");

/* !!!!!!!!!! Enter the width and height of the crop area. !!!!!!!!!! */

ij3d.ImageJ3DViewer.snapshot("961", "356");

IJ.selectWindow("Snapshot");

IJ.saveAs("Tiff", dir + "/rendering/render_tp" + timepoint + "_Angle" + angle + ".tif");

/* shutdown */

runtime.exit(0);We submit is as usual using ./submit-jobs .

#!/bin/bash

for file in `ls ${1} | grep ".job$"`

do

bsub -q short -n 1 -R span[hosts=1] -o "out.%J" -e "err.%J" ${1}/$file

doneTips and tricks

- This approach to making 3D rendering movies is still a hack, although its better than pure macro and TransformJ. We are working on a better solution.

- We are using an interplay of Interactive Stack Rotation to pre-process the image by rotating it to the desired position and then calling 3dVIewer to render it at that position while zooming in a bit.

- You can also use the TransformJ commands to rotate your fused stack to the orientation you like, possibly crop it if its too big and then open it in 3dViewer. Recording this as a macro and making it work (ala Stephan Preibisch) is possible, but it is incredibly laborious and nerve wracking.

- Whoever wants to rewrite the macro parts of

render.bshinto a real script is VERY welcome. - Even better would be, obviously, to pass the transformation matrix to the 3D Viewer, but it proved unreliable.

- The key to making it work on a cluster is to provide specific parameters about screen size to the

xvfb-runscript (-as"-screen 0 1280x1024x24"). Otherwise it doesn’t work. Thanks to Stephan Saalfeld for figuring it out. - The cluster makes it fairly easy to experiment with parameters and angles of view - on a single computer the same task would take days and since we are using ImageJ macro you would not be able to touch the computer. MPI-CBG cluster renders 800+ timepoints in half an hour even under full load from other users.

The 3D rendering is relatively complex (we are working on a simpler solution) but extremely rewarding. Drosophila embryogenesis movie coming soon here.

Processing 2 channels

This part will deal with the processing of SPIM data with 2 channels. The registration and fusion works very similar and needs only a few adjustments to the scripts above, which I will point out specifically. There are 2 main differences:

-

The Zeiss SPIM currently does not allow to export individual channels when acquiring 2 channels in fast frame mode. Thus we need to split the channels and save them as separate .tif files. When using the sequential mode you can skip this step.

-

In our case only 1 channel will have beads visible. Thus we will perform the registration only on this channel. The fusion program however, requires that registration files are present for both channels. To work around that, we will just duplicate the registration files from the channel that contains the beads.

Separating the channels

Rename and save the data as .tif following the steps described above. The data should be present now in the following format:

cd /projects/tomancak_lightsheet/Christopher/29072013_HisRuby_Stock1

ls

spim_TL1_Angle32.tif

spim_TL1_Angle104.tif

spim_TL1_Angle176.tif

spim_TL1_Angle248.tif

spim_TL1_Angle320.tif

...Each file contains 2 channels, which we need to split into individual files before we can proceed. We will save the individual channels into a new subdirectory:

mkdir splitTo separate the channels we create a bash script create-split-jobs. Save this script in a new subdirectory in the jobs directory.

mkdir jobs/split_channels

cd jobs/split_channelsThe creat-split-jobs will create the jobs that will be send to the cluster. You will need to edit the directory, the number of time points and the angles.

#!/bin/bash

#path of the directory where to find the files

dir="/projects/tomancak_lightsheet/Christopher/29072013_HisRuby_Stock1/"

#path where to find the job script

jobs="$dir/jobs/split_channels"

mkdir -p $jobs

#modify the number of time points

for i in `seq 1 72`

do

#modify the angles

for a in 32 104 176 248 320

do

job="$jobs/split-$i-$a.job"

echo $job

echo "#!/bin/bash" > "$job"

echo "xvfb-run -a /sw/people/tomancak/packages/Fiji.app/ImageJ-linux64 \

-Ddir=$dir -Dtimepoint=$i -Dangle=$a \

-- --no-splash ${jobs}/split.bsh" >> "$job"

chmod a+x "$job"

done

doneRun this script:

./create-split-jobsThe creat-split-jobs will use the split.bsh (written by Stephan Saalfeld). This script will split each file into individual channels and will save them into the directory split.

import ij.IJ;

import ij.ImagePlus;

import ij.ImageStack;

import java.lang.Runtime;

import java.io.File;

import java.io.FilenameFilter;

runtime = Runtime.getRuntime();

dir = System.getProperty( "dir" );

timepoint = System.getProperty( "timepoint" );

angle = System.getProperty( "angle" );

//open image

System.out.println( dir );

imp = new ImagePlus( dir + "spim_TL" + timepoint + "_Angle" + angle + ".tif" );

System.out.println( imp.getTitle() );

/* split channels */

stack = imp.getStack();

for ( c = 0; c < imp.getNChannels(); ++c )

{

channelStack = new ImageStack( imp.getWidth(), imp.getHeight() );

for ( z = 0; z < imp.getNSlices(); ++z )

channelStack.addSlice(

"",

stack.getProcessor(

imp.getStackIndex( c + 1, z + 1, 1 ) ) );

impc = new ImagePlus( imp.getTitle() + " #" + ( c + 1 ), channelStack );

/*Save individual files in the directory /split*/

IJ.save( impc, dir + "/split/" + imp.getTitle().replaceFirst( ".tif$", "_Channel" + ( c ) + ".tif" ) );

}

/* shutdown */

runtime.exit(0);Using the script “submit-jobs” will send the jobs to the cluster.

It pays of to do a test run with a small set. You can then determine the rough runtime and memory requirements of the jobs by looking at the output files. This information will allow the queuing system to put you in faster and eliminates failed jobs. The splitting will be rather fast 1-2 min and will require very little memory.

#!/bin/bash

for file in `ls ${1} | grep ".job$"`

do

bsub -q short -n 1 -W 00:05 -R span[hosts=1] -o "out.%J" -e "err.%J" ${1}/$file

done- “-W 00:05” will limit the wall time of the job to 5 minutes.

Run the script by:

./submit-jobs .Finally you should find the individual channels in the directory split. To the information of the time point and the angle, the channel information is added.

cd /projects/tomancak_lightsheet/Christopher/29072013_HisRuby_Stock1/split

ls

spim_TL1_Angle32_Channel0.tif

spim_TL1_Angle32_Channel1.tif

spim_TL1_Angle104_Channel0.tif

spim_TL1_Angle104_Channel1.tif

spim_TL1_Angle176_Channel0.tif

spim_TL1_Angle176_Channel1.tif

spim_TL1_Angle248_Channel0.tif

spim_TL1_Angle248_Channel1.tif

spim_TL1_Angle320_Channel0.tif

spim_TL1_Angle320_Channel1.tif

...You can now remove the .tif files that contain both channels. Determine which channel contains the beads. The multi-view registration and the time-lapse registration will be performed only on this channel.

Multi-view registration for 2 channels

In this example the beads are visible in channel 1. Therefore, we will proceed to register this channel. Modify in the create-registration-jobs script the directory, the number of time points and the angles (-Dangles).

#!/bin/bash

#modify directory

dir="/projects/tomancak_lightsheet/Christopher/29072013_HisRuby_Stock1/split/"

jobs="/projects/tomancak_lightsheet/Christopher/29072013_HisRuby_Stock1/jobs/registration"

mkdir -p $jobs

#Number of time points

for i in `seq 1 72`

do

job="$jobs/register-$i.job"

echo $job

echo "#!/bin/bash" > "$job"

#modify -Dangles

echo "xvfb-run -a /sw/people/tomancak/packages/Fiji.app/ImageJ-linux64 \

-Ddir=$dir -Dtimepoint=$i -Dangles=32,104,176,248,320 \

-- --no-splash ${jobs}/registration.bsh" >> "$job"

chmod a+x "$job"

done

./create-registration-jobsThe registration.bsh stays in principle the same as in the single channel. The only thing you need to modify is pattern_of_spim otherwise the program will not recognise the files. Just add the name of the channel to the file name.

As before you would change the z-resolution, radius channel_0_radius_1, channel_0_radius_2 and the channel_0_threshold according to the parameters you would have determined manually.

import ij.IJ;

import ij.ImagePlus;

import java.lang.Runtime;

import java.io.File;

import java.io.FilenameFilter;

runtime = Runtime.getRuntime();

System.out.println(runtime.availableProcessors() + " cores available for multi-threading");

dir = System.getProperty( "dir" );

timepoint = System.getProperty( "timepoint" );

angles = System.getProperty( "angles" );

IJ.run("Bead-based registration", "select_type_of_registration=Single-channel" + " " +

"select_type_of_detection=[Difference-of-Mean (Integral image based)] " + " " +

"spim_data_directory=" + dir + " " +

/*modify pattern of file*/

"pattern_of_spim=spim_TL{t}_Angle{a}_Channel1.tif" + " " +

"timepoints_to_process=" + timepoint + " " +

"angles_to_process=" + angles + " " +

// "load_segmented_beads" + " " +

"bead_brightness=[Advanced ...]" + " " +

"subpixel_localization=[3-dimensional quadratic fit (all detections)]" + " " +

"specify_calibration_manually xy_resolution=1.000 z_resolution=3.4972729682922363" + " " +

"transformation_model=Affine" + " " +

"channel_0_radius_1=3" + " " +

"channel_0_radius_2=5" + " " +

"channel_0_threshold=0.0070"

);

/* shutdown */

runtime.exit(0);The submit-jobs script is modified for the requirements of the registration. Determine these parameters with a small set before you apply them to all files.

-n 5use one processor per angle.-W 00:15Walltime of the job restricted to 15 min.-R rusage[mem=10000]10000MB of memory is required.

#!/bin/bash

for file in `ls ${1} | grep ".job$"`

do

bsub -q short -n 5 -W 00:15 -R rusage[mem=10000] -R span[hosts=1] -o "out.%J" -e "err.%J" ${1}/$file

done

./submit-jobs .The registration files should now be written in the directory registration. For each angle of each time point 3 registration files should be present:

cd /projects/tomancak_lightsheet/Christopher/29072013_HisRuby_Stock1/split/registration

ls

spim_TL1_Angle32_Channel1.tif.beads.txt

spim_TL1_Angle32_Channel1.tif.dim

spim_TL1_Angle32_Channel1.tif.registration

...Time-lapse registration for 2 channels

In the script timelapse.interactive modify -Ddir=, -Dtimepoint=, -Dreferencetp= (choose a good time point as reference) and -Dangles=.

#!/bin/bash

xvfb-run -a /sw/people/tomancak/packages/Fiji.app/ImageJ-linux64 \

-Ddir=/projects/tomancak_lightsheet/Christopher/29072013_HisRuby_Stock1/split/ \

-Dtimepoint=1-72 -Dreferencetp=1 -Dangles=32,104,176,248,320 \

-- --no-splash ./time-lapse.bshAnalog to the Multi-view registration add the channel information to the name of the file in the pattern_of_spim part of the script.

Give the z-resolution, channel_0_radius_1, channel_0_radius_2 and the channel_0_threshold as before.

import ij.IJ;

import java.lang.Runtime;

runtime = Runtime.getRuntime();

System.out.println(runtime.availableProcessors() + " cores available for multi-threading");

dir = System.getProperty( "dir" );

timepoint = System.getProperty( "timepoint" );

angles = System.getProperty( "angles" );

referencetp = System.getProperty( "referencetp" );

IJ.run("Bead-based registration", "select_type_of_registration=Single-channel" + " " +

"select_type_of_detection=[Difference-of-Mean (Integral image based)]" + " " +

"spim_data_directory=" + dir + " " +

/*modify pattern_of_spim*/

"pattern_of_spim=spim_TL{t}_Angle{a}_Channel1.tif" + " " +

"timepoints_to_process=" + timepoint + " " +

"angles_to_process=" + angles + " " +

"load_segmented_beads" + " " +

"subpixel_localization=[3-dimensional quadratic fit (all detections)]" + " " +

"specify_calibration_manually xy_resolution=1.000 z_resolution=3.278693914413452" + " " +

"transformation_model=Affine" + " " +

"channel_0_radius_1=3" + " " +

"channel_0_radius_2=5" + " " +

"channel_0_threshold=0.007" + " " +

"re-use_per_timepoint_registration" + " " +

"timelapse_registration" + " " +

"select_reference=[Manually (specify)]" + " " +

"reference_timepoint=" + referencetp

);

/* shutdown */

runtime.exit(0);Again modify the submit-jobs script according to the need of your timelapse registration. For my example these modifications worked:

-W 0025-R rusage[mem=5000]

#!/bin/bash

bsub -q short -n 12 -W 00:25 -R rusage[mem=5000] -R span[hosts=1] -o "out.%J" -e "err.%J" ./timelapse.interactive

./submit-jobs .

An additional registration file will be created in the directory registration.

cd /projects/tomancak_lightsheet/Christopher/29072013_HisRuby_Stock1/split/registration

ls

spim_TL1_Angle32_Channel1.tif.beads.txt

spim_TL1_Angle32_Channel1.tif.dim

spim_TL1_Angle32_Channel1.tif.registration

spim_TL1_Angle32_Channel1.tif.registration.to_1

...Duplicate registration files

Since the fusion requires the presents of registration files for both channel, we will duplicate the existing files of channel 1 and save them as registration files for channel 0. The following script duplicate_rename_registration.bsh will do just that. Create this script in the jobs directory.

You will need to modify the time points, angles, the used reference time point in registration.to_{your reference} and the directory.

The script will copy the existing files and save them under a new name with just the channel name changed.

#!/bin/bsh

# modify time points

for i in `seq 1 72`

do

#modify angles

for a in 32 104 176 248 320

do

#modify reference in time-lapse registration file ending

for end in "beads.txt" "dim" "registration" "registration.to_1"

do

# modify directories accordingly

cd /projects/tomancak_lightsheet/Christopher/29072013_HisRuby_Stock1/split/registration/

cp ./spim_TL"$i"_Angle"$a"_Channel1.tif."$end" ./spim_TL"$i"_Angle"$a"_Channel0.tif."$end"

done

done

done

echo Duplication complete! Have a nice day!

exit 0Execute the script:

sh duplicate_rename_registration.bshNow for each channel of each angle and timepoint registration files should be present.

cd /projects/tomancak_lightsheet/Christopher/29072013_HisRuby_Stock1/split/registration

ls

spim_TL1_Angle32_Channel0.tif.beads.txt

spim_TL1_Angle32_Channel0.tif.dim

spim_TL1_Angle32_Channel0.tif.registration

spim_TL1_Angle32_Channel0.tif.registration.to_1

spim_TL1_Angle32_Channel1.tif.beads.txt

spim_TL1_Angle32_Channel1.tif.dim

spim_TL1_Angle32_Channel1.tif.registration

spim_TL1_Angle32_Channel1.tif.registration.to_1

...Fusion for 2 channels

The create_fusion_jobs for 2 channels works the same as for the single channel fusion. Just modify the directory, the number of time points, the angles under -Dangles and choose a cropping area (-Dx, -Dy, -Dz, -Dw, -Dh, -Dd).

#!/bin/bash

#modify directory

dir="/projects/tomancak_lightsheet/Christopher/29072013_HisRuby_Stock1/split"

jobs="/projects/tomancak_lightsheet/Christopher/29072013_HisRuby_Stock1/jobs/fusion"

mkdir -p $jobs

# modify number of timepoints

for i in `seq 1 72`

do

job="$jobs/fusion-$i.job"

echo $job

echo "#!/bin/bash" > "$job"

#modify -Dangles and cropping area

echo "xvfb-run -a /sw/people/tomancak/packages/Fiji.app/ImageJ-linux64 \

-Xms100g -Xmx100g -Ddir=$dir -Dtimepoint=$i \

-Dangles=32,104,176,248,320 -Dchannels=0,1 -Dreference=0 \

-Dx=68 -Dy=250 -Dz=404 -Dw=1746 -Dh=846 -Dd=654 \

-- --no-splash $jobs/fusion.bsh" >> "$job"

chmod a+x "$job"

doneExecute script

./create-fusion-jobsThe fusion.bsh script needs to be set for multi channel registration. Under select_channel, Multi-channel registration=[Individual registration of channel 1] registration=[Individual registration of channel 1] will be set. downsample_output in this case is set to 4.

import ij.IJ;

import java.lang.Runtime;

runtime = Runtime.getRuntime();

System.out.println(runtime.availableProcessors() + " cores available for multi-threading");

dir = System.getProperty( "dir" );

timepoint = System.getProperty( "timepoint" );

angles = System.getProperty( "angles" );

channels = System.getProperty( "channels");

reference = System.getProperty( "reference" );

x = System.getProperty( "x" );

y = System.getProperty( "y" );

z = System.getProperty( "z" );

w = System.getProperty( "w" );

h = System.getProperty( "h" );

d = System.getProperty( "d" );

iter = System.getProperty( "iter" );

IJ.run("Multi-view fusion", "select_channel=Multi-channel registration=[Individual registration of channel 1] registration=[Individual registration of channel 1]" + " " +

"spim_data_directory=" + dir + " " +

"pattern_of_spim=spim_TL{t}_Angle{a}_Channel{c}.tif timepoints_to_process=" + timepoint + " " +

"angles=" + angles + " " +

"channels=" + channels + " " +

"fusion_method=[Fuse into a single image]" + " " +

"process_views_in_paralell=All" + " " +

"blending" + " " +

"content_based_weights_(fast," + " " +

"downsample_output=4" + " " +

"registration=[Individual registration of channel 0]" + " " +

"crop_output_image_offset_x=" + x + " " +

"crop_output_image_offset_y=" + y + " " +

"crop_output_image_offset_z=" + z + " " +

"crop_output_image_size_x=" + w + " " +

"crop_output_image_size_y=" + h + " " +

"crop_output_image_size_z=" + d + " " +

"fused_image_output=[Save 2d-slices, one directory per time-point]");

/* shutdown */

runtime.exit(0);The submit-jobs script is modified according to the requirements of the fusion:

-W 00:20-R rusage[mem=50000]

Worked in my example, but again I would recommend that you modify this with the information from a small set of your own data.

#!/bin/bash

for file in `ls ${1} | grep ".job$"`

do

bsub -q short -n 12 -W 00:20 -R rusage[mem=50000] -R span[hosts=1] -o "out.%J" -e "err.%J" ${1}/$file

done

./submit-jobs .The fused images will be saved into separate subdirectories for each time point into the output directory.

NEW PIPELINE

The new pipeline is centered around a configuration file, the master file, that contains all the relevant processing parameters. It increases the efficiency of the processing significantly since mainly this file is manipulated for each dataset, instead of the scripts in each processing step individually.

The master file has two parts. The first part contains all the relevant processing parameters for each individual processing step. The second part contains some more advanced settings and the links for the job scripts and directories.

The new pipeline also comes with a new set of scripts that are specifically modified to be used with the master file. The general idea is to have these job scripts together with the master file independent from the dataset. The scripts will use the master file as a source for the processing parameters. The jobs will be created and executed within the job directories just as before, the success of the jobs can be assessed with the output and the error files. The master file can be saved and can serve as a documentation for the processing.

Currently the master file is useable for the following steps.

- Single-channel processing:

- Rename

.czifiles - Resave

.czifiles - Resave

.ome.tifffiles - Multi-view registration

- Timelapse registration

- Content based multi-view fusion

- External transformation

- Multi-view deconvolution

- 3D-rendering

- Export to hdf5 format

- Rename

- Multi-channel processing:

- Rename

.czifiles - Resave

.czifiles - Registration

- Timelapse registration

- Content based multi-view fusion

- 3D-rendering for 2 channels

- Export to hdf5 format

- Rename

All the scripts work with padded zeros.

At the moment this tutorial is written for advanced users that already used the previous pipeline. For a more detailed introduction please read into the description of the previous pipeline.

Master file

There are two parts in this file:

-

Processing Parameters

-

Directories for scripts and advanced settings for processing

The first part contains everything relevant for processing and will be modified for each dataset. It is further structured according to each processing step.

The second part contains the links for the working directories and scripts. Since the jobs scripts should rest at one particular location these links need to be changed the first time you start processing. This part also contains more advanced settings for registration, fusion and deconvolution. Which should only be touched when fully understanding these steps.

We will discuss each section of this file with the associated processing step.

#!/bin/bash

####============================= Processing Parameters ==============================

####--------------------------------- General Parameters ---------------------------------

###Data directory

dir="/projects/tomancak_lightsheet/Christopher/Test_scripts/single-channel/"

###Dataset core parameters

timepoint="`seq 1 3`"

angles="1,2,3"

num_angles="3"

###pattern of spim data:

pattern_of_spim="spim_TL{tt}_Angle{a}.tif"

#pattern_of_spim="spim_TL{tt}_Angle{a}_Channel{c}.tif"

##change pattern for single-channel: (e.g. spim_TL{tt}_Angle{a}.tif)

##or multi-channel processing: (e.g. spim_TL{tt}_Angle{a}_Channel{c}.tif)

##for padded zeros use tt as place holder

###Timelapse registration

referencetp="1"

###Manual calibration

xy_resolution="1"

z_scaling="3.2643520832"

###---------- Renaming .czi files ----------

first_index="0"

last_index="8"

first_timepoint="1"

angles_renaming=(1 2 3)

##For padded zero 2 = 01; 3 = 001

pad_rename_czi="2"

##Change directory and pattern

source_pattern=/2013-11-14_His-YFP\(\{index\}\).czi

target_pattern=/spim_TL\{timepoint\}_Angle\{angle\}.czi

###---------- Resaving .czi or ome.tiff as .tif ----------

##use ometiff_resave for ome.tiff and czi_resave for .czi

angle_resaving="1 2 3"

##For padded zero 2 = 01; 3 = 001

pad_resave="2"

###---------- Split Channels (Only for multi-channel data) ----------

##Outputs channls as spim_TL{tt}_Angle{a}_Channel0,spim_TL{tt}_Angle{a}_Channel1 ...

angles_split="1 2 3 4 5"

##Target directory

target_split="/channel_split/"

###---------- Multi-view registration (Difference of mean or Difference of Gaussian) ----------

##Specify the Pattern for Detection of the beads single channel: spim_TL{t}_Angle{a}.tif

##multi-channel: spim_TL{t}_Angle{a}_Channel1.tif were 1 is the Channel that contains the beads

channel_pattern_beads="spim_TL{tt}_Angle{a}.tif"

#channel_pattern_beads="spim_TL{tt}_Angle{a}_Channel1.tif"

##Difference of mean (Comment out Difference of Gaussian parts in registration.bsh script)

type_of_detection="\"Difference-of-Mean (Integral image based)\""

radius1="2"

radius2="3"

threshold="0.008"

##Difference of Gaussian (Comment out Differnce of Mean parts in registration.bsh script)

#type_of_detection="\"Difference-of-Gaussian\""

#initial_sigma="1.8000"

#threshold_gaussian="0.0080"

###---------- Timelapse registration ----------

timelapse_timepoint="1-3"

###---------- Dublicate Registration files (Only for multi-channel data) ----------

#Channel that contain the beads

channel_source="1"

channel_target="0"

###---------- Multi-view content based fusion ----------

##Change between Single-Channel or Mulit-channel fusion

##If single channel then comment out 2nd fusion command in fusion.bsh script

select_channel="Single-channel"

#select_channel="Multi-channel"

##Use timelapse registration or Individual registration

##For timelapse registration specify reference timepoint:

registration_fusion="\"Time-point registration (reference=1) of channel 0\""

##Individual registration:

#registration_fusion="\"Individual registration of channel 0\""

downsample_output="2"

##Cropping parameters of full resolution

x="100"

y="226"

z="355"

w="1731"

h="820"

d="755"

###---------- External transformation for multi-view deconvolution ----------

##Caution: Before applying the exteranl transformation make a copy of the registration files

##Only single channel, use external transformation for each individual channel

pattern_extrans="spim_TL{tt}_Angle{a}.tif"

#pattern_extrans="spim_TL{tt}_Angle{a}_Channel1.tif"

##timepoints:

external_transformation_timepoint="1-3"

##For downsampling 2x use 0.5

m00="0.5"

m11="0.5"

m22="0.5"

###---------- Multi-view deconvolution ----------

##Only for single channel, use deconvolution for each individual channel

pattern_deconvo="spim_TL{tt}_Angle{a}.tif"

#pattern_deconvo="spim_TL{tt}_Angle{a}_Channel1.tif"

iter="1"

##Cropping parameters: if downsampled divid fusion cropping parameters by this factor

decox="50"

decoy="113"

decoz="177"

decow="865"

decoh="410"

decod="377"

###---------- Rendering ----------

##Two different sets of scripts, one for single channel and one for multi-channel; choose:

##Working directory

jobs_rendering="/projects/pilot_spim/Christopher/pipeline/jobs_master_beta_2.0/3d_rendering_cpu"

##Working script

#rendering="/projects/pilot_spim/Christopher/pipeline/jobs_master_beta_2.0/3d_rendering_cpu/single-render-mov.bsh"

rendering="/projects/pilot_spim/Christopher/pipeline/jobs_master_beta_2.0/3d_rendering_cpu/multi-render-mov.bsh"

##source

source_rendering="/output_fusion"

##target directory

target_rendering="/rendering"

nframes="6"

##Min Max single channel

#minimum_rendering="0.6"

#maximum_rendering="0.005"

##Min Max multi channel

min_ch0=0.9

max_ch0=0.01

min_ch1=0.9

max_ch1=0.01

##For multi-channel rendering:

zSlices="369"

##Orientation or rotation

#still needs to be put into the script directly

#under construction

###---------- hdf5 export ----------

##Target directory

target_hdf5="/hdf5/"

##Number of jobs

num_export_job="`seq 0 3`"

#Path directory

path="\"/projects/pilot_spim/Christopher/Test_scripts/single-channel\""

#path="\"/projects/pilot_spim/Christopher/Test_scripts/multi-channel/channel_split\""

#Xml filename

exportXmlFilename="\"/hdf5/Test_single.xml\""

##Spim pattern

##For single channel:spim_TL{tt}_Angle{a}.tif

##For 2 channel: spim_TL{tt}_Angle{a}_Channel{c}.tif

inputFilePattern="\"spim_TL{tt}_Angle{a}.tif\""

#inputFilePattern="\"spim_TL{tt}_Angle{a}_Channel{c}.tif\""

##Channels: change for 2 channel data

channels_export="\"0\""

#channels_export="\"0,1\""

angles_export="\"1,2,3\""

timepoint_export="\"1-3\""

referencetp_export="\"1\""

filepath="\"/output_fusion/\""

filepattern="\"%1\\\$d/img_tl%1\\\$d_ch%2\\\$d_z%3\\\$03d.tif\""

export_numSlices="376"

sliceValueMin="0"

sliceValueMax="60000"

cropOffsetX="100"