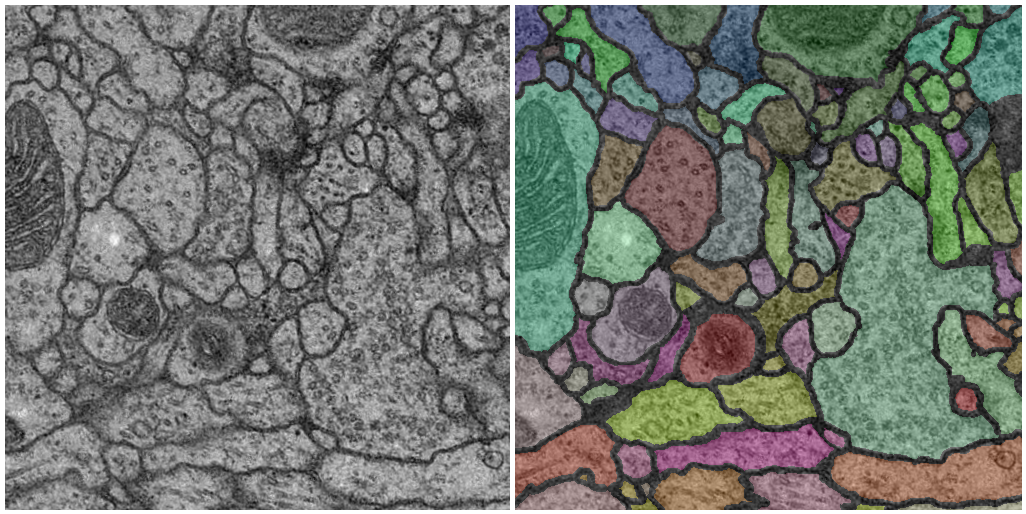

Segmentation of neuronal structures in EM stacks challenge - ISBI 2012

The images are representative of real-world data: they contain some noise and small image alignment errors. None of these problems caused any difficulty in the manual labeling of each element in the image stack by an expert human neuroanatomist. The aim of the challenge is to compare and rank the competing methods based on their pixel and object classification accuracy.

Relevant dates

- Deadline for submitting results: March 1st, 2012 (originally February 1st)

- Notification of the evaluation: March 2nd, 2012 (originally February 21st)

- Deadline for submitting abstracts: March 9th, 2012 (originally March 1st)

- Notification of acceptance/presentation type: March 16th, 2012 (originally March 15th)

The workshop competition is done but the challenge remains open for new contributions.

How to participate

Anyone can participate in the challenge. The only requirement is to fill out the registration form to obtain a user name and password for downloading the data and uploading the results.

This challenge was part of a workshop held before the IEEE International Symposium on Biomedical Imaging (ISBI) 2012. After the publication of the evaluation ranking, teams were invited to submit an abstract. During the workshop, participants had the opportunity to present their methods, and the results were discussed.

Each team received statistics regarding their results. After the workshop, an overview article will be compiled by the organizers of the challenge, with up to three members per participating team as co-authors.

If you have any questions about the challenge, please do not hesitate to contact the organizers. There is also an open discussion group that you can join.

Training data

The training data is a set of 30 sections from a serial section Transmission Electron Microscopy (ssTEM) data set of the Drosophila first instar larva ventral nerve cord (VNC). The microcube measures 2 x 2 x 1.5 microns approx., with a resolution of 4x4x50 nm/pixel.
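The figures above can be cross-checked with a little arithmetic. The following is a minimal sketch that uses only the numbers quoted in the paragraph; the constant names are illustrative, and the lateral extent is taken as exactly 2 microns even though the text says "approx.".

```python
# Values quoted in the text above.
SECTION_COUNT = 30            # number of sections in the training stack
SECTION_THICKNESS_NM = 50     # z resolution, nm per section
PIXEL_SIZE_NM = 4             # x/y resolution, nm per pixel
LATERAL_EXTENT_NM = 2000      # "2 microns approx." (assumed exact here)

# Depth of the microcube: 30 sections of 50 nm each.
depth_um = SECTION_COUNT * SECTION_THICKNESS_NM / 1000

# Approximate number of pixels along one side of each section.
approx_pixels_per_side = LATERAL_EXTENT_NM / PIXEL_SIZE_NM

# Ratio of section thickness to in-plane pixel size.
anisotropy = SECTION_THICKNESS_NM / PIXEL_SIZE_NM

print(depth_um, approx_pixels_per_side, anisotropy)
```

The depth matches the stated 1.5 microns, and the ratio shows that each voxel is considerably thicker along the sectioning axis than it is wide within a section.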

The corresponding binary labels are provided in an in-out fashion: white for the pixels of segmented objects and black for the remaining pixels (which correspond mostly to membranes).

To get the training data, please register on the challenge server, log in, and go to the "Downloads" section.

This is the only data that participants are allowed to use to train their algorithms.

Test data

The competing segmentation methods will be ranked by their performance on a test dataset, which is also available on the challenge server after registration. This test data is another volume from the same Drosophila first instar larva VNC as the training dataset.

Results format

The results are expected to be uploaded to the challenge server as a 32-bit TIFF 3D image, with values between 0 (100% membrane certainty) and 1 (100% non-membrane certainty).
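As an illustration of the value convention, the sketch below maps per-pixel membrane probabilities to the expected range. It assumes your method outputs a membrane probability per pixel (so the values need to be inverted); the function names are hypothetical, and writing the actual TIFF file is left to whichever imaging library you use.

```python
import struct

def as_float32(value):
    """Round a Python float to the nearest 32-bit float, as stored in a 32-bit image."""
    return struct.unpack("<f", struct.pack("<f", value))[0]

def to_submission_values(membrane_probabilities):
    """Map membrane probabilities to the range used for results.

    Output convention from the text: 0 means certain membrane,
    1 means certain non-membrane.
    """
    values = []
    for p in membrane_probabilities:
        v = 1.0 - p                    # invert: high membrane probability -> low value
        v = min(1.0, max(0.0, v))      # clamp to the valid range [0, 1]
        values.append(as_float32(v))
    return values

print(to_submission_values([1.0, 0.0, 0.25]))
```

If your method already produces non-membrane probabilities, only the clamping and the 32-bit conversion apply.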

Evaluation metrics

To evaluate and rank the performance of the participating methods, we will use 2D topology-based segmentation metrics, together with the pixel error (for the sake of metric comparison). Each metric will have its own updated leaderboard.

The metrics are:

- Minimum Splits and Mergers Warping error, a segmentation metric that penalizes topological disagreements, in this case, the object splits and mergers.

- Foreground-restricted Rand error: defined as 1 - the maximal F-score of the foreground-restricted Rand index, a measure of similarity between two clusterings or segmentations. In this version of the Rand index we exclude the zero component of the original labels (background pixels of the ground truth).

- Pixel error: defined as 1 - the maximal F-score of pixel similarity, or squared Euclidean distance between the original and the result labels.

If you want to apply these metrics yourself to your own results, you can do it within Fiji using this script.
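For a quick sanity check outside Fiji, the pixel error can be approximated in a few lines. The sketch below is an illustration under stated assumptions, not a port of the Fiji script: it treats membrane pixels (label 0) as the positive class, sweeps a fixed set of thresholds, and works on flattened images; the function name and the default thresholds are hypothetical.

```python
def pixel_error(labels, result, thresholds=None):
    """Return 1 - the best pixel-wise F-score over a set of thresholds.

    `labels` holds ground-truth values (0 = membrane, nonzero = object) and
    `result` holds values in [0, 1] (0 = certain membrane). Treating membrane
    as the positive class is an assumption made for this sketch.
    """
    if thresholds is None:
        thresholds = [i / 10 for i in range(1, 10)]  # 0.1, 0.2, ..., 0.9

    best_f_score = 0.0
    for threshold in thresholds:
        tp = fp = fn = 0
        for label, value in zip(labels, result):
            predicted_membrane = value < threshold  # low values mean membrane
            is_membrane = label == 0
            if predicted_membrane and is_membrane:
                tp += 1
            elif predicted_membrane:
                fp += 1
            elif is_membrane:
                fn += 1
        if tp > 0:
            f_score = 2 * tp / (2 * tp + fp + fn)
            best_f_score = max(best_f_score, f_score)
    return 1.0 - best_f_score

print(pixel_error([0, 0, 1, 1], [0.1, 0.4, 0.6, 0.9]))
```

Numbers obtained this way are only indicative; the official ranking depends on the organizers' implementation.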

We understand that segmentation evaluation is an ongoing and sensitive research topic, so the metrics are open to discussion. Please do not hesitate to contact the organizers to discuss the metric selection.

Organizers

- Ignacio Arganda-Carreras (Howard Hughes Medical Institute, Department of Brain and Cognitive Sciences, Massachusetts Institute of Technology, Cambridge, MA, USA)

- Sebastian Seung (Howard Hughes Medical Institute, Department of Brain and Cognitive Sciences, Massachusetts Institute of Technology, Cambridge, MA, USA)

- Albert Cardona (Institute of Neuroinformatics, Uni/ETH Zurich, Switzerland)

- Johannes Schindelin (University of Wisconsin-Madison, WI, USA)

References

Publications about the data:

- Albert Cardona, Stephan Saalfeld, Stephan Preibisch, Benjamin Schmid, Anchi Cheng, Jim Pulokas, Pavel Tomancak and Volker Hartenstein (2010), An Integrated Micro- and Macroarchitectural Analysis of the Drosophila Brain by Computer-Assisted Serial Section Electron Microscopy, PLoS Biol (Public Library of Science) 8 (10): e1000502, DOI 10.1371/journal.pbio.1000502, <http://dx.doi.org/10.1371%2Fjournal.pbio.1000502>

Publications about the metrics:

- V. Jain, B. Bollmann, M. Richardson, D.R. Berger, M.N. Helmstaedter, K.L. Briggman, W. Denk, J.B. Bowden, J.M. Mendenhall, W.C. Abraham, K.M. Harris, N. Kasthuri, K.J. Hayworth, R. Schalek, J.C. Tapia, J.W. Lichtman, S.H. Seung (2010), Boundary Learning by Optimization with Topological Constraints, IEEE Conference on Computer Vision and Pattern Recognition, pp. 2488-2495, DOI 10.1109/CVPR.2010.5539950